Overfitting in Product Design [10/100]

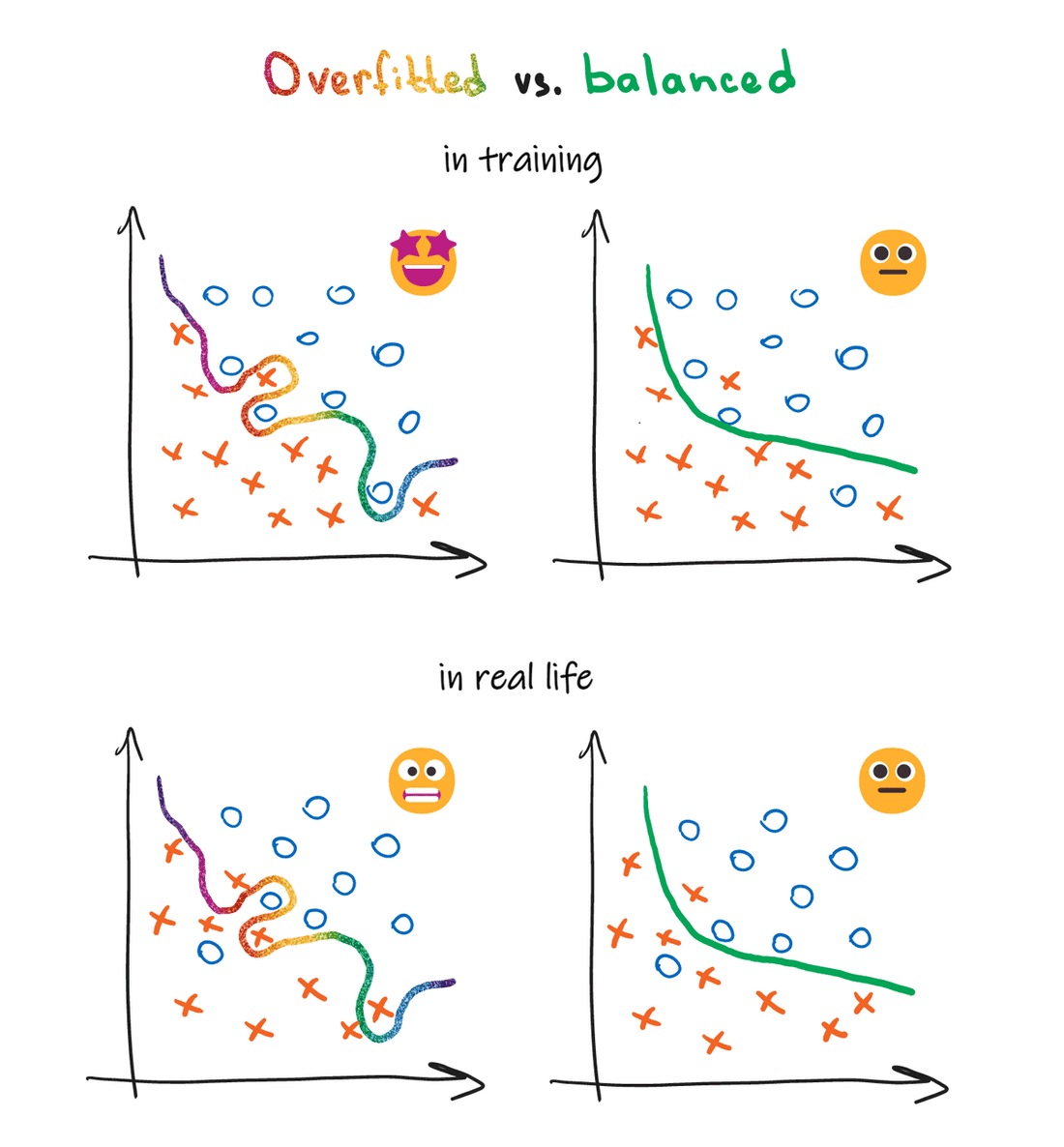

Overfitting is a concept from machine learning: it describes a model that works ridiculously well on the data it’s been trained on, but produces pitiful results in the real world.

Having gone through a few humiliating redesigns, I’d argue that the same concept applies to product development.

How can a product design be overfitted?

Here is a recent example:

- We got a ton of feedback on access management in Fibery, our collaboration software.

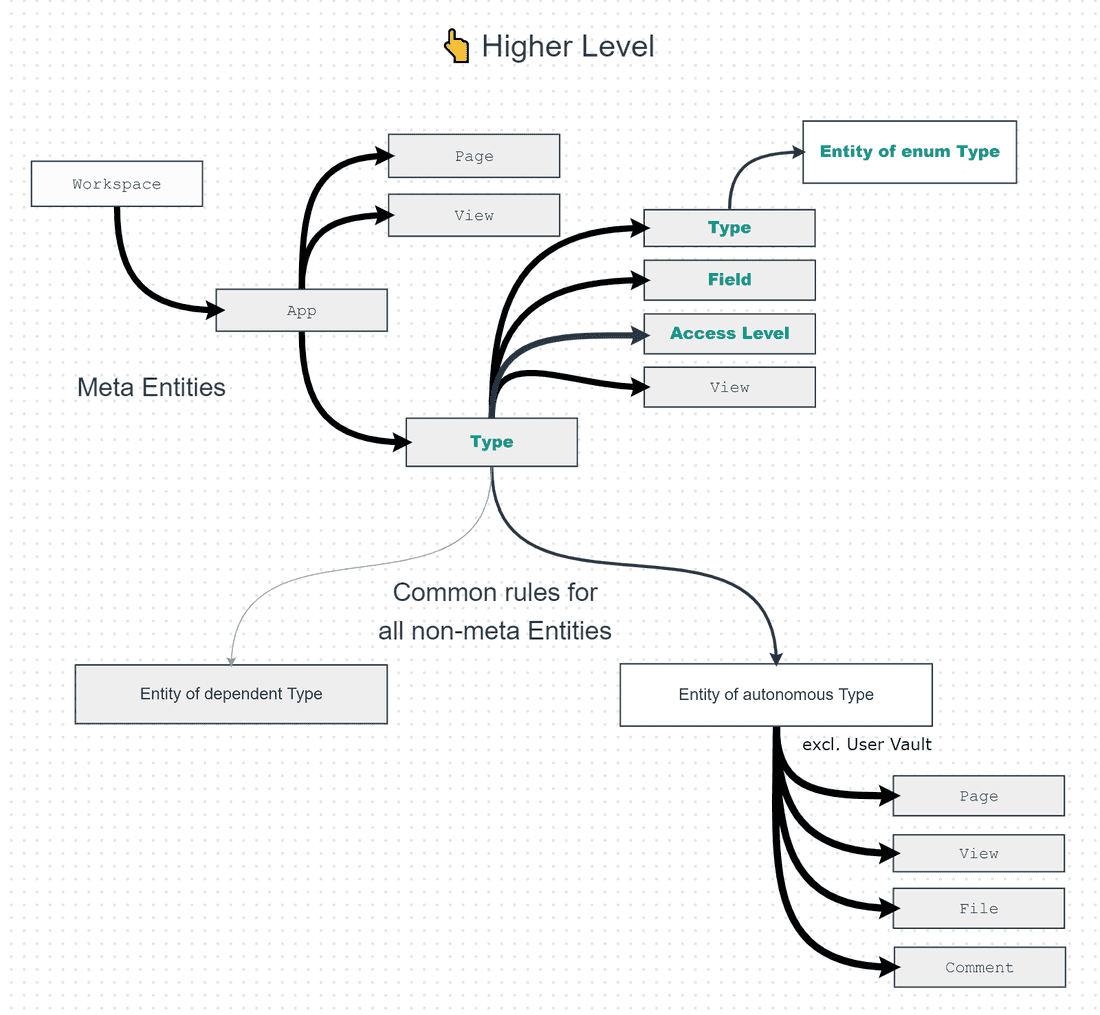

- I designed a juggernaut of a permissions model, incorporating every use case in existence (or so I thought).

- Luckily, the implementation was postponed because the developers were still busy with their previous feature.

- While the project was on hold, new use cases arrived: “how do I give access to all Features?”, “how would Forms work?”.

- The design wasn’t ready for these cases: we applied more and more gaffer tape fixes before discarding the model altogether and starting anew.

The analogy is clear: we overfitted our model (design) to the training data (use cases).

An overfitted product design ticks all the boxes and shines during early demos, so it’s easy to fall into this trap.

Unfortunately, it all goes downhill from there: implementation takes longer than expected, and newly arriving use cases add more and more features to the backlog. Once the thing goes into production, every new piece of feedback triggers a commit. The solution is a struggle to maintain and impossible to scale: an overfitted design can’t adapt.

How to avoid overfitting?

The risk of overfitting is highest when designing a complex product area on top of numerous and diverse feedback — think access management, payments, and automation.

Here are some 🚩 red flags to look for before a design goes to production:

- a lot of abstractions and terms,

- many exceptions and special cases,

- developers transferring to other teams after reading the spec 😅.

To prevent overfitting, we borrow and adapt techniques from data science: punishing model complexity and separating training and testing data sets.

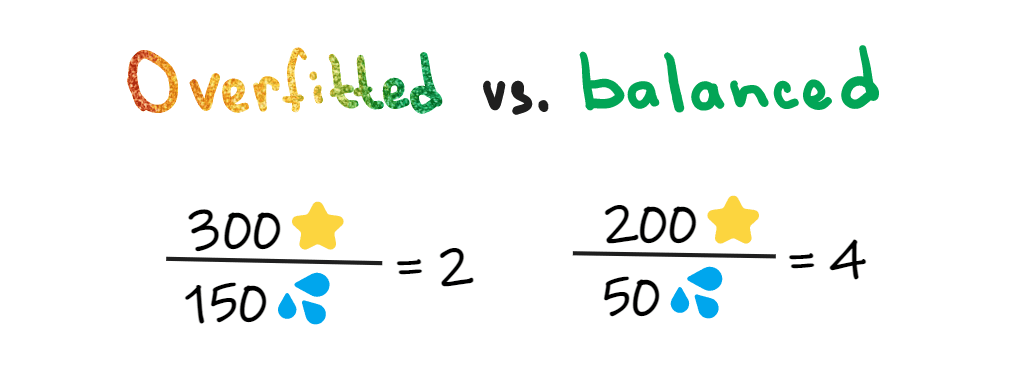

Punish complexity. When comparing design candidates, we prioritize solutions with the maximum value-to-cost ratio. Value represents how well a solution covers the use cases: +100 points for a recurring and well-understood case, +2 points for a weird lonely request. Cost, in our case, is complexity: +50 sweat drops for each new term (e.g. “Document” is different from “Entity”), +20 sweat drops for every special case (e.g. “User can delete anything they’ve created apart from Folders”). Super-duper intricate designs earn a lot of points but are soaked in sweat, so they give way to simpler solutions.

☝️ It is usually too early to estimate engineering effort at this point, but it correlates well with design complexity.
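To make the arithmetic concrete, here is a minimal sketch of the scoring in Python. The weights come from the examples above; the class, the function names, and the two candidates with their counts are made up for illustration, not taken from our actual spec.

```python
from dataclasses import dataclass

# Weights from the examples in the text; constant names are hypothetical.
VALUE_RECURRING_CASE = 100  # recurring, well-understood use case
VALUE_RARE_CASE = 2         # weird lonely request
COST_NEW_TERM = 50          # sweat drops per new term
COST_SPECIAL_CASE = 20      # sweat drops per special case


@dataclass
class DesignCandidate:
    name: str
    recurring_cases_solved: int
    rare_cases_solved: int
    new_terms: int
    special_cases: int

    def value(self) -> int:
        return (self.recurring_cases_solved * VALUE_RECURRING_CASE
                + self.rare_cases_solved * VALUE_RARE_CASE)

    def cost(self) -> int:
        return (self.new_terms * COST_NEW_TERM
                + self.special_cases * COST_SPECIAL_CASE)

    def ratio(self) -> float:
        # Treat a zero-cost design as cost 1 to avoid dividing by zero.
        return self.value() / max(self.cost(), 1)


def pick_best(candidates):
    """Return the candidate with the highest value-to-cost ratio."""
    return max(candidates, key=lambda c: c.ratio())


# Invented numbers: the juggernaut covers more cases but costs far more.
juggernaut = DesignCandidate("juggernaut", recurring_cases_solved=6,
                             rare_cases_solved=10, new_terms=8,
                             special_cases=12)
simple = DesignCandidate("simple", recurring_cases_solved=5,
                         rare_cases_solved=2, new_terms=2,
                         special_cases=1)

print(pick_best([juggernaut, simple]).name)  # -> simple
```

With these invented numbers the juggernaut scores 620 points for 640 sweat drops, while the simple design scores 504 points for 120, so the simpler candidate wins despite covering fewer cases.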

Save use cases for later. Against our intuition, we randomly set aside a portion of product feedback before designing a feature: this becomes our test data set. Once a design candidate exists, we check it against the cases we held back. Alternatively, if there are too few use cases available, we read them all but schedule more customer interviews to validate the solution.

☝️ The same method applies to outsourced development as well: simply replace “feedback” with “requirements”.
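The holdout idea can be sketched in a few lines of Python. This is only an illustration under assumptions: the function names, the 30% holdout share, and most of the sample use cases are hypothetical, and in practice "does the design handle this case?" is a human judgment rather than a function.

```python
import random


def split_feedback(use_cases, holdout_fraction=0.3, seed=None):
    """Randomly split use cases into a design set and a held-out test set."""
    rng = random.Random(seed)
    shuffled = list(use_cases)
    rng.shuffle(shuffled)
    holdout_size = round(len(shuffled) * holdout_fraction)
    test_set = shuffled[:holdout_size]
    design_set = shuffled[holdout_size:]
    return design_set, test_set


def coverage(design_handles, test_set):
    """Share of held-out use cases that the design handles."""
    if not test_set:
        return 0.0
    handled = sum(1 for case in test_set if design_handles(case))
    return handled / len(test_set)


# The first two cases are from the story above; the rest are made up.
feedback = [
    "how do I give access to all Features?",
    "how would Forms work?",
    "share one document with a contractor",
    "hide salaries from everyone except HR",
    "read-only access for clients",
    "let team leads invite new members",
    "revoke access when someone leaves",
    "share a whole workspace with a partner",
    "comment-only access for reviewers",
    "temporary access for an auditor",
]

design_set, test_set = split_feedback(feedback, holdout_fraction=0.3, seed=42)
# Design against design_set only, then review the result against test_set.
```

A design that covers the design set but stumbles on the held-out cases is a hint that it was fitted to the feedback rather than to the underlying problem.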

With these techniques, we pick designs that look underwhelming at first but do not crumble later. A balanced design works well for scenarios we haven’t even considered.

P.S.

The concept of overfitting is shockingly universal.

This article focuses on product design simply because ~~of SEO~~ that’s what I pretend to know.

Here is some Richard Feynman for you:

> When you have put a lot of ideas together to make an elaborate theory, you want to make sure, when explaining what it fits, that those things it fits are not just the things that gave you the idea for the theory; but that the finished theory makes something else come out right, in addition.
