The ICE Prioritization Framework: Everything You Need to Know

Product management isn’t for sloths. Every day, you must make multiple decisions rapidly, with as much accuracy as possible.

This can get pretty hectic, especially when you don’t have the proper tools to help you.

Before you get all hot-headed, let us introduce you to ICE – a simple but brilliant prioritization tool that simplifies daily PM struggles.

Here’s what it’s all about and how to use it to sharpen your decision-making skills.

What does ICE stand for?

The name of the ICE Scoring Model stands for three numerical values you assign to a project:

- Impact: How much will a project influence your major goals?

- Confidence: How confident are you that the influence will happen?

- Ease: How easy will it be to complete the project?

Developed by Sean Ellis, famous for coining the term “growth hacking,” the ICE score is used for quick experimentation and iterative development in a messy, dynamic startup environment.

The goal here is to score each item on the board and organize it by putting the most impactful and easiest-to-deliver initiatives on top.

The best part? You can apply all those scores to your project in a couple of minutes. Let’s take a closer look:

Calculating the ICE score

Scoring items using the ICE method consists of two steps:

First, take an item from the board and assign 0-10 points to each of the three values mentioned above.

Then add up the three scores and divide the sum by three – and your ICE score is done.

This formula explains the process best:

(Impact + Confidence + Ease) / 3 = ICE Score
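If you'd rather see the formula as code, here's a minimal Python sketch. The function name `ice_score` and the range check are our own illustration (not something Sean Ellis or any tool prescribes), and the sample scores are made up:

```python
def ice_score(impact: float, confidence: float, ease: float) -> float:
    """Average the three ICE components, each expected on a 0-10 scale."""
    components = (("impact", impact), ("confidence", confidence), ("ease", ease))
    for name, value in components:
        # Reject scores outside the 0-10 range used in this article.
        if not 0 <= value <= 10:
            raise ValueError(f"{name} must be between 0 and 10, got {value}")
    return (impact + confidence + ease) / 3


# Hypothetical scores, just to show the call.
print(ice_score(9, 6, 3))  # 6.0
```

The range check is optional, but it catches typos like a 70 entered instead of a 7 before they skew your ranking.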

This seems too easy, right? A few questions may immediately pop up in your head. For example, how do you score a project’s Impact if you’ve never done anything like this before? Or how much confidence should you have to score an item as a 3 or a 7?

This is where most Product Managers slip on the ICE score (pun intended). So, let’s dive deeper into each component and clarify how to use it:

Impact: Look at the key metric

Sean Ellis in “Hacking Growth” describes Impact as:

[…] the expectation about the degree to which the ideas will improve the metric being focused on

Source: “Hacking Growth”, S. Ellis

To measure the impact, you need to have a clear goal or key result in mind. Then, you analyze how much the project can influence this metric positively.

Say you’re building a budget management app and have three different feature ideas:

| Idea | Impact |
| --- | --- |
| Automated Expense Tracking and Categorization | 8 |
| Customizable Budget Creation with Real-Time Updates | 9 |
| Savings Goals and Progress Tracking | 7 |

Your primary goal for the next six months is to increase the number of users who return to the app daily.

Now, analyze each idea with that metric in mind. “Savings Goals and Progress Tracking” might not bring many users to the app daily – this feature is about long-term goals. So, let’s assign a 7 here.

Similarly, automated expense tracking doesn’t require the user to return to the app daily. On the flip side, it might encourage users to check the app daily to see their progress. Let’s assign it an 8.

Lastly, customizable and real-time budgets can significantly increase daily app engagement as users manage and track their expenses against their budget. We’ll assign 9 points to this feature.

The goal of this metric is to prioritize as many high-impact tests as possible. But, according to Sean Ellis:

… if some of them will take several weeks, or longer, to prepare for launch, then some easier tests should be slotted into the schedule.

Source: “Hacking Growth”, S. Ellis

Okay, but how sure can we be about the success of each experiment we labeled “impactful”?

This is where the ICE method’s second score comes into play:

Confidence: Use discovery

While assessing your confidence about a feature’s impact, you have two routes to take:

- Wild-guessing what your customers want

- Asking them

The first one is also known as “disaster.”

The second tells you if your idea will contribute to your key metric.

Of course, conducting surveys for all ideas would be extreme. But, as a Product Manager, you deal with many risky projects every day. After a while, your experience, knowledge, and understanding of the market become extensive enough to spot bad ideas.

You can also use any previous tests that showcase your users’ interest in a particular feature, or ask your marketing team about your customers’ most important values and goals.

Back to our example. You’re sure automated expense tracking will find many regular users, but only if it’s accurate and the categorization is effective.

When it comes to customizable budget creation, users will appreciate the immediate control and feedback on their spending. This strengthens the confidence about the feature’s impact.

Finally, setting saving goals can initially boost engagement, but it’s not a daily activity. This feature has the least potential to help you improve the key metric.

Here’s the updated ICE score table with assessed Confidence based on our analysis:

| Idea | Impact | Confidence |
| --- | --- | --- |
| Automated Expense Tracking and Categorization | 8 | 7 |
| Customizable Budget Creation with Real-Time Updates | 9 | 8 |
| Savings Goals and Progress Tracking | 7 | 6 |

We’re getting there. Before we jump into the development, we need to make sure not to choose a feature that takes us a year to implement.

Let’s assess the last component of the ICE score:

Ease: Calculate the cost

Ease describes how much time and resources you need to make your idea happen.

It’s a reality check for you and your team that helps identify overly ambitious ideas or quickly deliverable low-hanging fruits.

Let’s finish the analysis for our budget management app:

| Idea | Impact | Confidence | Ease | ICE Score |
| --- | --- | --- | --- | --- |
| Automated Expense Tracking and Categorization | 8 | 7 | 4 | 6.3 |
| Customizable Budget Creation with Real-Time Updates | 9 | 8 | 5 | 7.3 |
| Savings Goals and Progress Tracking | 7 | 6 | 6 | 6.3 |

The “Automated Expense Tracking and Categorization” requires connecting with multiple banking systems. Plus, you need to develop a robust categorization algorithm that provides insightful feedback for the user.

All of this can take lots of work and won’t happen overnight (caffeine won’t help here, either). Because of all this, we can’t assign a high score to this feature.

The second feature still demands much development work but includes no external integrations. This makes it a bit easier to deliver, so we’ll bump its Ease score up by one point.

The last feature doesn’t require many complex integrations or a robust update system. This gives it the highest Ease score out of all three features.

After some quick math, you’ll see “Customizable Budget Creation with Real-Time Updates” is the clear winner at helping you increase daily user returns to the app.
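For the curious, that "quick math" can be sketched in a few lines of Python. The scores come straight from the table above; the variable names and the `ice_score` helper are just our illustration:

```python
# Scores copied from the table above: (impact, confidence, ease).
ideas = {
    "Automated Expense Tracking and Categorization": (8, 7, 4),
    "Customizable Budget Creation with Real-Time Updates": (9, 8, 5),
    "Savings Goals and Progress Tracking": (7, 6, 6),
}


def ice_score(impact, confidence, ease):
    """Average of the three components, as in the ICE formula."""
    return (impact + confidence + ease) / 3


scores = {name: ice_score(*parts) for name, parts in ideas.items()}

# Highest score first; ideas with equal scores keep their original order.
ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)

for name, score in ranked:
    print(f"{score:.1f}  {name}")
```

Note that the other two ideas tie at 6.3, so the score alone won't tell you which of them comes second. That's where your own judgment steps back in.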

The problem is – we needed to spend quite a lot of time breaking down the process and analyzing each score (not applicable to fast readers).

Is there a way to make this process slightly less daunting?

ICE framework: a template that does the trick

Scoring your tasks with the ICE method should happen fast. You don’t need to grab a calculator and paper whenever you want to use it.

Here’s our method for incorporating ICE into your workflow and automating calculations for each new idea you consider.

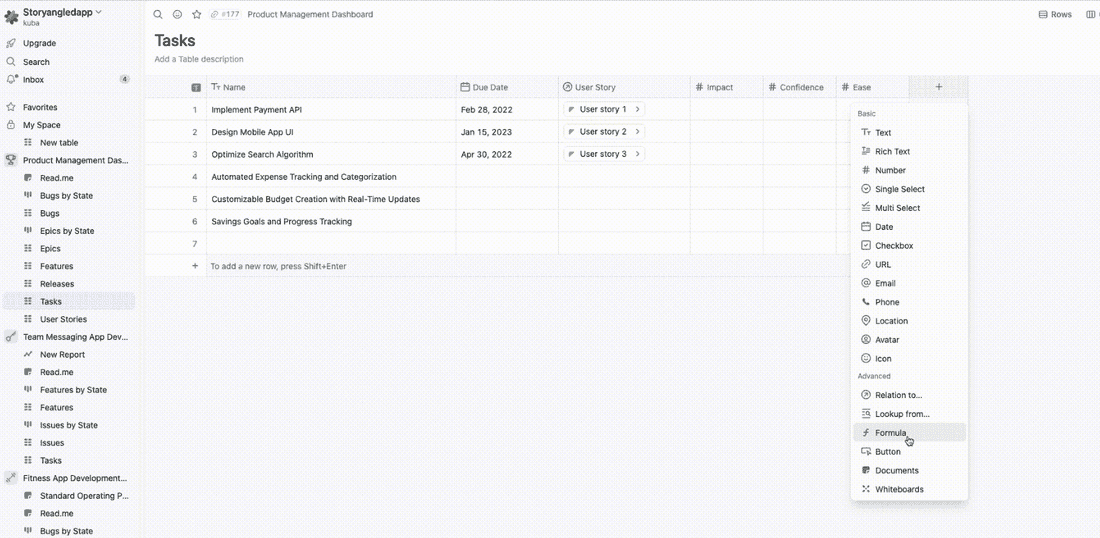

In your product management space in Fibery, set each component of the ICE method as a separate number:

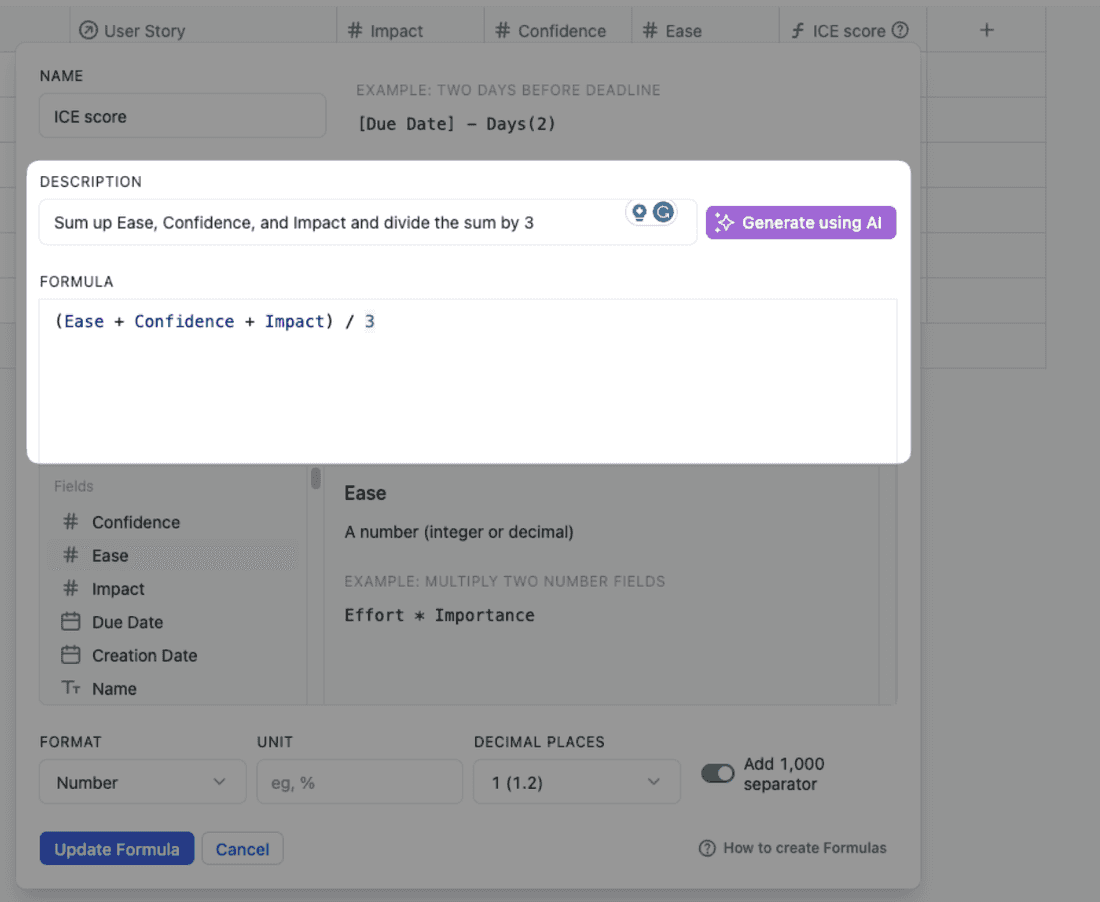

Next, create a custom formula in the new column:

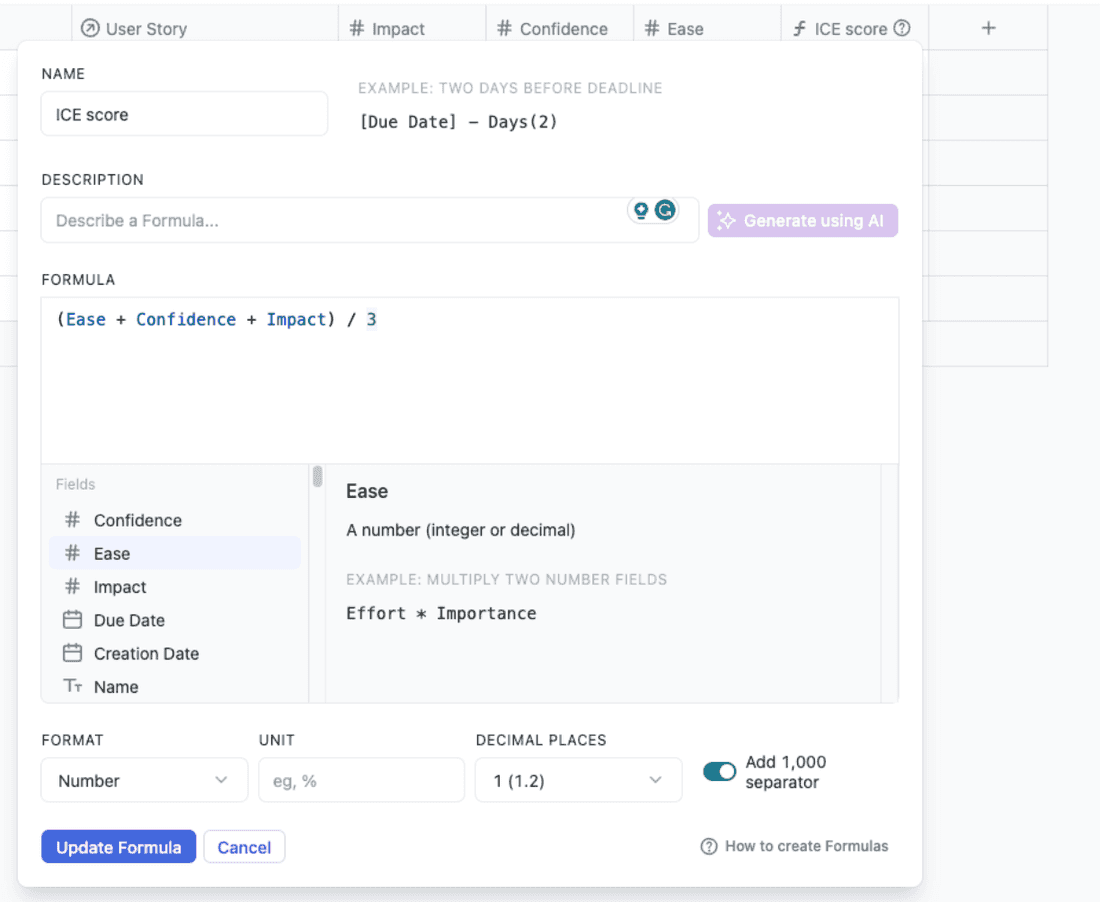

You can either copy the formula below and paste it into the formula window:

(Ease + Confidence + Impact) / 3

Or you can simply ask Fibery’s AI what you want to achieve and let it create a perfect formula for you:

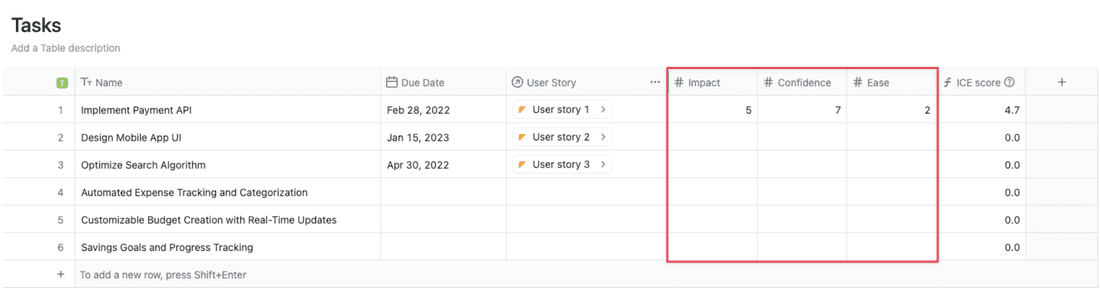

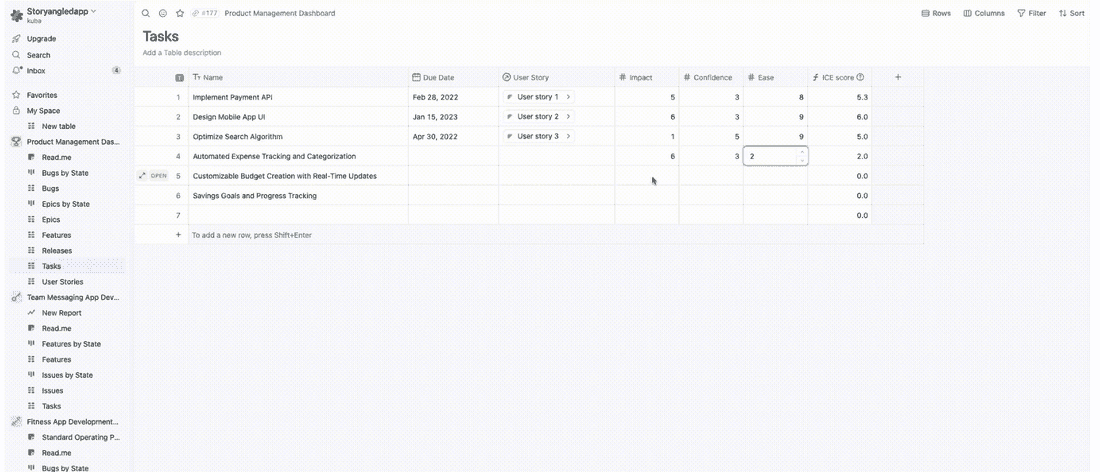

After you’ve created the formula, return to the Tasks list and add the appropriate scores to each task (you should know by now what “appropriate” means 👀).

Can we take a moment and appreciate how little math was involved in getting the score?

Fibery lets you automate calculating the ICE score across multiple tasks in your backlog. You can reach conclusions faster and increase the efficiency of conducting new experiments.

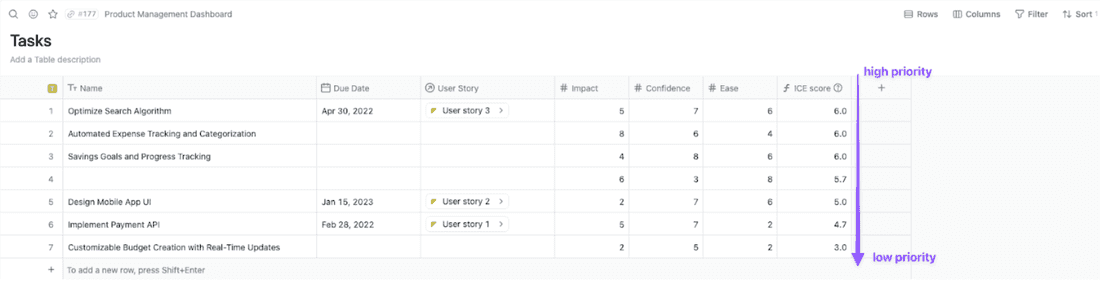

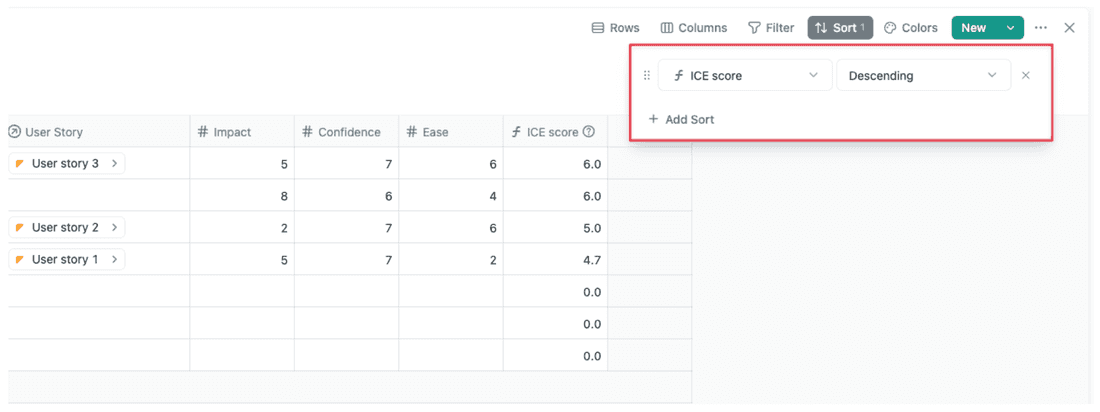

Lastly, you can arrange the tasks in descending order based on their ICE scores. This will show you the tasks with the biggest success potential first.

Let’s set this up in your workspace, too. With Fibery, you can be ready to score your first tasks in less than 5 minutes (we timed ourselves).

What’s the difference between ICE and RICE?

To be honest, many prioritization techniques are similar to each other. And when it comes to ICE vs. RICE, this seems to be the case.

The RICE framework stands for “Reach, Impact, Confidence, Effort.” At first glance, it seems almost identical to the ICE framework, with only one new component – Reach.

Well, things aren’t as simple.

First, both methods have different origins.

The RICE score was created specifically for feature prioritization. You should use RICE when you have several urgent features to implement and aren’t sure which one to pick first.

The ICE method came from the growth hacking world and was primarily used for organizing growth experiments.

Second, the calculations differ. ICE averages its three scores, while for RICE you multiply Reach, Impact, and Confidence, and divide the result by Effort:

Reach * Impact * Confidence / Effort

Reach expresses how many people your new feature or project will affect over a specific time.

For example: “800 users per month will use this feature” is how you’d describe the Reach for your app.

The Effort score (which replaces “Ease” from the ICE method) describes the labor costs as the number of “man-months”, weeks, or hours – depending on the needs.
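Here's a rough Python sketch of the RICE formula. The function name `rice_score` is our own, and apart from the 800 users per month mentioned above, the sample values (Impact, Confidence, Effort) are placeholders we made up. This article doesn't prescribe scales for them, so treat the result as an illustration only:

```python
def rice_score(reach: float, impact: float, confidence: float, effort: float) -> float:
    """Reach * Impact * Confidence / Effort."""
    if effort <= 0:
        # Effort is a divisor, so zero or negative values make no sense here.
        raise ValueError("effort must be positive")
    return reach * impact * confidence / effort


# Reach: 800 users per month (from the example above).
# Impact, confidence, and effort below are assumed values for illustration.
score = rice_score(reach=800, impact=2, confidence=0.8, effort=2.5)
print(round(score))
```

Unlike the ICE average, this score has no fixed upper bound, so it's only meaningful when you compare items scored on the same scales.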

Here’s how you’d assess effort for the budgeting app:

“Automated Expense Tracking and Categorization” will take about a week of planning, 3 weeks for design, and 6 weeks for development. The labor costs will be about 2.5 person-months.

“Customizable Budget Creation with Real-Time Updates” will require a week of planning, a week of design, and two to three weeks of development. Here, labor costs drop to one person-month.
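The conversion from weeks to person-months can be sketched like this. We're assuming one person working throughout and four working weeks per month; both are our simplifications, and the helper name `person_months` is hypothetical:

```python
# Assumption: one person on the job, four working weeks per person-month.
WEEKS_PER_PERSON_MONTH = 4


def person_months(planning_weeks, design_weeks, development_weeks):
    """Convert the phase estimates (in weeks) into person-months."""
    total_weeks = planning_weeks + design_weeks + development_weeks
    return total_weeks / WEEKS_PER_PERSON_MONTH


# Automated Expense Tracking: 1 + 3 + 6 weeks.
print(person_months(1, 3, 6))  # 2.5

# Customizable Budget Creation: 1 + 1 + 2 to 3 weeks.
print(person_months(1, 1, 2))  # 1.0
print(person_months(1, 1, 3))  # 1.25
```

With two to three weeks of development, the second feature lands between 1.0 and 1.25 person-months, which is why it's rounded to one.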

ICE excels at agile product experimentation when rapid feedback is key. The Ease score allows focusing first on tests that can be implemented quickly solo or by small teams.

RICE suits strategic planning that involves effort across several teams in the company.

So, ICE is the way to go for simple, one-team projects. But, when things get complex, the RICE scoring will deliver much more accurate results.

The PM’s hot take

If you are an early-stage startup’s PM, ICE can come in handy. It lets you sift through a plethora of features so that you can focus on delivering results fast. If you are late stage or working at an enterprise, the time-sensitivity of delivering results might be different - in that case, ICE is not an ideal tool.

Final Thoughts

When things reach high speeds in your Agile company, you can’t rely on tedious methods of planning your next step. The ICE method will help you spot any growth opportunities quickly and plan your team’s work for maximum efficiency.

The first step? Set up your ICE scoring template using Fibery and start organizing your work in less than 5 minutes.
