RICE Framework & Scoring: A Guide to Successful Prioritization

As a CPO, product manager, or project manager, you’re probably familiar with the prioritization struggle. Are you focusing on real value? Will the product have the impact you need? Is this going to backfire? How do you justify your choices?

The RICE framework is one answer to the perennial question of how to prioritize the products and features for your product roadmap.

In this article, you’ll learn not only what the RICE framework is but also how to apply it to level up your product management skills.

What is the RICE framework?

The RICE framework is a prioritization scoring system that can help product managers figure out which of their products, features, and initiatives should take the top spots on their roadmaps.

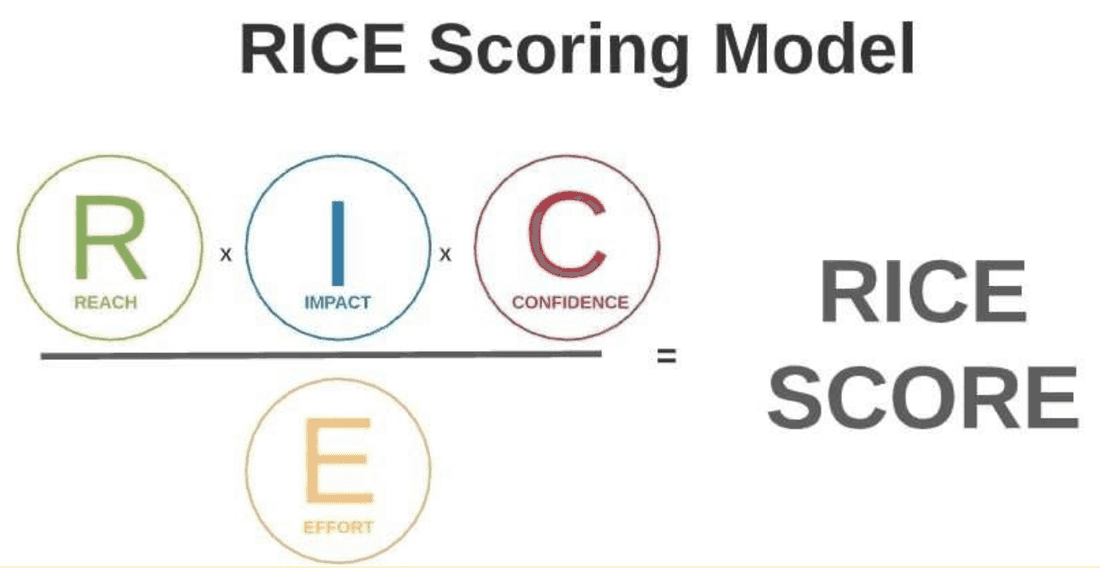

The four factors you score them on (reach, impact, confidence, and effort) form the acronym RICE. After you estimate these four factors, you plug them into the RICE formula to get a RICE score for each of your products or features. With a RICE score for each one, you have a consistent way to compare products or features in terms of their relative value, importance, or priority.

RICE not only allows you to compare competing features more objectively but also provides data that you can use to communicate those comparisons to others. You can use it to explain your priorities to other key stakeholders who might have their own personal interests.

Knowing what to prioritize is vital in the bigger picture of release planning. Using RICE and other prioritization methods can help you to see what features will deliver the most value so that you can focus on them first.

Over time, the RICE scoring model can help you refine your decision-making process. If you track the outcomes of tasks alongside their RICE scores, you can learn from your choices and make better decisions in the future.

RICE explained letter by letter (with an example)

Below is an explanation of each factor, along with an example in which you’re deciding which feature to prioritize for a new mobile app.

The mobile app is designed to help people manage their personal finances, and the two feature options are “Budget Tracking” and “Expense Visualization.”

What is reach in the RICE framework?

The first factor to calculate is the reach of your initiative. You have to decide both the definition of “reach” and the timeframe over which you want to measure it. Ask yourself: how many people do you estimate your product will reach in a given timeframe?

If you have data for this, you’ll likely get a much more accurate score than pulling numbers from the ether (or elsewhere). You can also try to gather data about your market or audience with surveys, market research, or any other applicable (and reliable) methods you might have available.

For example, suppose your market research estimates that there are 10,000 active phone users in the current market for the app you’re developing. Based on the surveys you sent out, around 7,000 of those people say they would use an app with budget tracking features, and 6,000 say they would use the expense visualization feature.

What is impact?

Impact is the brawn of the project, the power it has over individual users. Will it turn the tide, and if so, how much?

Impact can reflect a quantitative objective, such as the number of potential users who sign up after coming across your app. It can also reflect a more qualitative goal, such as increasing stakeholder excitement.

You may want to use a scoring system similar to Intercom’s (Intercom created the RICE framework). Its scale uses only a handful of values, spaced far enough apart that you can clearly tell the relative scores apart.

Like, can we, as mere humans, really tell the difference in value between scores such as 93 vs. 94? Nope. Choose numbers that clearly distinguish relative values, so you have enough clarity to sort through the pile of features you need to prioritize.

Also, document your assumptions and calculations, as you might refer to them later when you use RICE for a different feature.

Here’s the scale that Intercom uses for impact:

- 3 = Massive impact (possibly game-changing 🚀)

- 2 = High impact

- 1 = Medium impact (valuable, but not going to stand out too much)

- 0.5 = Low impact (will make a small difference)

- 0.25 = Minimal impact (nice to have, but most users probably won’t notice 😶‍🌫️)

Of course, you can build your own scale; just choose (and define the meaning of) a few values that are clearly distinct from one another.
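If your team scores features in a script rather than a spreadsheet, you can enforce a fixed scale by accepting only named levels. Here’s a minimal Python sketch, assuming you name the five levels after Intercom’s labels (massive, high, medium, low, minimal); `IMPACT_SCALE` and `impact_value` are hypothetical names, not part of any tool.

```python
# Hypothetical Intercom-style impact scale: a few widely spaced values
# instead of a 1-100 range that nobody can score consistently.
IMPACT_SCALE = {
    "massive": 3.0,
    "high": 2.0,
    "medium": 1.0,
    "low": 0.5,
    "minimal": 0.25,
}


def impact_value(label: str) -> float:
    """Return the numeric impact for a named level, rejecting anything off-scale."""
    key = label.strip().lower()
    if key not in IMPACT_SCALE:
        allowed = ", ".join(IMPACT_SCALE)
        raise ValueError(f"unknown impact level {label!r}; use one of: {allowed}")
    return IMPACT_SCALE[key]


print(impact_value("High"))  # 2.0
```

Because a score like “93” simply isn’t accepted, nobody can sneak in false precision.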

For the app example, your impact score evaluates how much each feature will affect users’ experience of your app.

Say your market research shows that budget tracking is in high demand and low supply, especially within your market, so you give it a score of 2. If a soft launch or honest user feedback came back positive, you could lean toward a 3.

Expense visualization, while valuable, doesn’t seem to excite anyone in your demographic, but since the survey showed some interest, you’ll score it at 1.

What is confidence?

Confidence is the level of certainty you have about your estimates of reach and impact, expressed as one of three percentages: 50% (low), 80% (medium), or 100% (high). With Intercom’s scale, you can easily tell the levels apart, as opposed to trying to distinguish between 72% and 84%. A few simple choices keep the process agile and avoid debates that can slow teams down.

The confidence factor of your RICE score helps you to compensate for projects where you have data to support one factor of your score, but you are relying more on instinct for another.

For example, if data backs up your impact estimate but only a gut feeling or hearsay supports your reach estimate, you likely won’t give this product or feature a high confidence score, and the lower percentage scales its RICE score down to reflect that uncertainty. This also works the other way: if you have data on both your reach and impact, you can justify a higher confidence score.

For the app example, confidence reflects how sure you are about the appeal each feature will have for customers.

Let’s say your user surveys and market analysis show a high interest in budget tracking, giving you 100% confidence in this feature. For expense visualization, you have less certainty, so your confidence level is 80%.

What is effort?

Effort is the amount of work it takes to complete a product or feature. The exact definition of effort might vary, but most project and product managers use time and budget as their main metrics to measure against.

Effort is the only negative factor in the RICE framework: it’s the number you divide the other three factors by. Yep, the feature is fantastic. But it will take your whole team of 10 a year (um, 120 person-months). So the effort might drag down that crazy high-value feature.

For your app example, you have to look at the amount of effort that you need to exert to get the feature to market.

Here’s how you can analyze this:

- Budget tracking effort: You’ll need extensive backend and frontend development, integration with financial data sources, and thorough testing, which you estimate at up to 400 hours, or about three months.

- Expense visualization effort: You’ll need some frontend work and data visualization, plus integration with the current database, which you estimate at 150 hours, or about one month.

How to calculate a RICE score

Now, to calculate the RICE score, you use this formula:

- RICE Score = (Reach x Impact x Confidence) / Effort

The higher the RICE score, the higher the priority.

Let’s calculate the RICE score for your app example:

- Budget Tracking’s RICE Score: (7000 x 2 x 1) / 3 ≈ 4,667

- Expense Visualization’s RICE Score: (6000 x 1 x 0.8) / 1 = 4,800

Based on this analysis, Expense Visualization has a higher RICE score.
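The same calculation and ranking can be scripted. Below is a minimal Python sketch, assuming confidence is entered as a fraction (1.0 for 100%, 0.8 for 80%) and effort in months, as in the example; `rice_score` is a hypothetical helper, not a library function.

```python
def rice_score(reach: float, impact: float, confidence: float, effort: float) -> float:
    """RICE score = (Reach x Impact x Confidence) / Effort."""
    if effort <= 0:
        raise ValueError("effort must be positive (e.g., months or person-months)")
    if not 0 < confidence <= 1:
        raise ValueError("confidence must be a fraction, e.g., 0.5, 0.8, or 1.0")
    return reach * impact * confidence / effort


# (reach, impact, confidence, effort in months) from the finance-app example.
features = {
    "Budget Tracking": (7000, 2, 1.0, 3),
    "Expense Visualization": (6000, 1, 0.8, 1),
}

# Higher score = higher priority, so sort in descending order.
ranked = sorted(features, key=lambda name: rice_score(*features[name]), reverse=True)
for name in ranked:
    print(f"{name}: {rice_score(*features[name]):,.0f}")
```

Running it lists Expense Visualization (4,800) ahead of Budget Tracking (4,667). Rejecting zero effort and out-of-range confidence up front is a design choice that stops a typo from silently producing a huge or meaningless score.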

However, budget tracking trails by only a small margin, and its impact and confidence scores are high, so validate these estimates before you decide which feature to move forward with. A soft launch can check its impact further. You could also look into ways to reduce its effort, such as adding resources to drive the effort down.
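To see how close the call is, you can rearrange the formula to find the effort at which budget tracking would tie expense visualization. This minimal Python sketch reuses the example’s numbers; `break_even_effort` is a hypothetical helper.

```python
def break_even_effort(reach, impact, confidence, score_to_beat):
    """Effort at which (reach * impact * confidence) / effort equals score_to_beat.

    Any effort below this value gives a higher RICE score than score_to_beat.
    """
    return reach * impact * confidence / score_to_beat


# Budget Tracking (reach 7000, impact 2, confidence 100%) vs. a score of 4,800.
limit = break_even_effort(7000, 2, 1.0, 4800)
print(f"Budget Tracking wins if effort drops below {limit:.2f} months")
```

The break-even point is about 2.92 months, less than a tenth of a month below the three-month estimate, which is why a modest effort reduction could flip the priority.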

How can I use the RICE prioritization framework?

The RICE prioritization method relies on putting a number on each factor: reach, impact, confidence, and effort. If those numbers are only estimates, your decisions might still rest on subjective opinions and inaccurate data. You can only call it data-driven if you have the correct numbers and your scoring scale has worked for you in the past. If it has, then great, you have an efficient scoring system! 🥳

Even when the inputs are subjective, RICE still turns your estimates into numbers, which are easier and more reliable to compare than a gut feeling. Could you justify buying a burger over a salad if you had a score that compared the health benefits of each meal? Probably not. But you could justify it if you were scoring them based on taste. The criteria you choose set the bar for the scoring system, and the scores then act as a guide for the choice (burger or salad).

RICE can help prevent some biases, like pet projects or the shiny object just out of reach. It also gives you a way to tell everyone the quantifiable reasons you are going after a feature: the salad vs. the burger. Sharing those reasons also clears up assumptions that clients and team members might have and aligns them around a common goal, since everyone has a clear framework to follow.

When you do a “RICE analysis,” you’ll do more discovery to increase confidence, back more decisions with data, focus on impact, and stay customer-centric through reach, all of which reflect product development best practices.

You can make this whole process super simple using Fibery’s product management template, which houses a RICE prioritization feature.

Should I use the RICE framework?

If you have enough data to support each element, then RICE can be beneficial for all the reasons that were just covered.

However, RICE ain’t perfect as a framework; it has some limitations and challenges:

- RICE can take a lot of time and is tricky to apply, especially if you have tons of roadmap items or if you need to validate a lot of data from many sources.

- RICE can be misleading or inaccurate if you rely on inaccurate or outdated data, or if you underestimate or overestimate your factors.

- RICE can be subjective if you take a biased approach to estimating your reach, impact, confidence, or effort. This can lead you to believe your decisions are data-driven when, in fact, you’ve already skewed the data points yourself.

You should continually examine and adjust your scores and use customers’ and users’ feedback to check the accuracy of your ideas and theories. If the calculations seem off, then you’ll need to try to validate them; don’t just stick to them because that’s how you scored them. There could be something you missed.

There are several frameworks and methodologies you can use to prioritize features. Some work very well with the RICE method, and some can stand alone as their own prioritizing method.

Here are two notable options:

WSJF

The first is the Weighted Shortest Job First (WSJF) prioritization technique. It was originally developed as a way to order work so as to minimize the cost of delay: how much value do you give up if an item is delayed by a certain amount of time (duration)? For example, if an item is worth $150,000 per month in value and it is delayed by 2 months, the cost of delay is $300,000.
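The cost-of-delay idea also explains why the technique puts the “shortest” highly valuable jobs first. In its original form, items are ranked by cost of delay per month divided by duration. The Python sketch below uses three hypothetical jobs (names, values, and durations are invented for illustration), computes the $150,000 × 2 months example with `cost_of_delay`, and checks by brute force that this ordering gives the lowest total cost of delay. It assumes each job delivers its value only once it’s finished; under that assumption, the ordering matches a classic scheduling result known as Smith’s rule.

```python
from itertools import permutations

# Hypothetical jobs: (name, value per month once delivered, duration in months).
jobs = [("A", 150_000, 2), ("B", 50_000, 1), ("C", 90_000, 3)]


def cost_of_delay(value_per_month, months_delayed):
    """Value given up while an item waits: value per month x months of delay."""
    return value_per_month * months_delayed


def total_cost_of_delay(sequence):
    """Sum each job's cost of delay, counting the months until it is finished."""
    elapsed, total = 0, 0
    for _name, value, duration in sequence:
        elapsed += duration  # the job delivers value only when it is done
        total += cost_of_delay(value, elapsed)
    return total


# WSJF: do first the job with the highest cost of delay per month of duration.
wsjf_order = sorted(jobs, key=lambda job: job[1] / job[2], reverse=True)
best = min(permutations(jobs), key=total_cost_of_delay)

print(cost_of_delay(150_000, 2))                  # 300000
print([name for name, _, _ in wsjf_order])        # ['A', 'B', 'C']
print(total_cost_of_delay(wsjf_order) == total_cost_of_delay(best))  # True
```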

WSJF was later modified in the Scaled Agile Framework (SAFe) to refine the definition of business value and change other factors, like substituting level of effort for duration. It has two key variables to classify tasks:

- Weighted: The weighted impact that the work or task item has on the overall project goal (SAFe calls this the cost of delay).

This variable is the sum of three elements: user or business value, the importance of doing the “thing” now rather than later, and how much the thing lowers your risk or positions you for a follow-on opportunity.

- Shortest: The level of effort (job size) required for the job. The “weighted” sum is then divided by this scaled effort factor.

Scoring the “thing” on these factors gives you a view of what to tackle first. The point of WSJF is, therefore, to tackle first the items with the greatest value relative to their effort.
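Here’s a minimal Python sketch of the SAFe-style calculation: add up the three value elements, then divide by job size. The field names, the example releases, and their relative scores are hypothetical; in practice, teams estimate each element relative to the other items on the backlog.

```python
from dataclasses import dataclass


@dataclass
class Item:
    """A backlog item scored with relative (not absolute) estimates."""
    name: str
    business_value: int    # user or business value
    time_criticality: int  # importance of doing it now rather than later
    risk_opportunity: int  # risk reduction and/or follow-on opportunity
    job_size: int          # relative effort

    @property
    def cost_of_delay(self) -> int:
        # The "weighted" part: the sum of the three value elements.
        return self.business_value + self.time_criticality + self.risk_opportunity

    @property
    def wsjf(self) -> float:
        # Divide the weighted sum by the scaled effort (job size).
        return self.cost_of_delay / self.job_size


# Hypothetical scores for three releases.
backlog = [
    Item("Release 1", business_value=8, time_criticality=5, risk_opportunity=3, job_size=8),
    Item("Release 2", business_value=5, time_criticality=8, risk_opportunity=2, job_size=3),
    Item("Release 3", business_value=13, time_criticality=3, risk_opportunity=5, job_size=13),
]

for item in sorted(backlog, key=lambda i: i.wsjf, reverse=True):
    print(f"{item.name}: WSJF = {item.wsjf:.2f}")
```

Release 3 has the largest cost of delay but also the largest job size, so it lands last; Release 2 goes first because it delivers a lot of value for little effort.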

So what is the “thing”? Some would include products, features, and projects as valid “things”.

The Project Management Institute (PMI) advises that WSJF “should only be applied to items that will realize value when released.” In its view, features by themselves don’t deliver value. It recommends applying WSJF to minimum increments or releases that deliver value (and of course, to products and projects).

Because WSJF evaluates both value and task time (effort), it can be beneficial for both linear and iterative product development. In short, WSJF identifies the “things” that bring the highest value in the shortest amount of time.

MoSCoW method

The MoSCoW approach is a four-step model that helps to identify products or features that offer the greatest ROI.

MoSCoW stands for must, should, could, and won’t; the o’s are only there to make the acronym easier to say.

The steps for MoSCoW include:

- List all the features.

- Evaluate each feature on how much users want it, how difficult it is to implement, and how much it will enhance the product.

- Plot the features on a chart with the four quadrants for Must, Should, Could, and Won’t.

- Prioritize the features based on where they appear in the chart.

Combining MoSCoW with RICE can yield high-quality releases as you factor in ROI. For example, after you calculate the RICE scores for the app features, you might see that the higher-impact feature can deliver more value or ROI over a longer period of time.
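There’s no single prescribed way to combine the two, so the Python sketch below shows just one hypothetical approach: MoSCoW decides the bucket, and the RICE score sets the order within each bucket. The RICE scores for the two finance-app features come from the example; the other features and all the bucket assignments are made up for illustration.

```python
# MoSCoW buckets in priority order; a lower rank means "do it sooner".
MOSCOW_RANK = {"must": 0, "should": 1, "could": 2, "won't": 3}

# Hypothetical features: (name, MoSCoW bucket, RICE score).
features = [
    ("Expense Visualization", "should", 4800),
    ("Budget Tracking", "must", 4667),
    ("Savings Goals", "should", 3100),
    ("Dark Mode", "could", 5200),
    ("Crypto Wallet", "won't", 6100),
]

# Sort by bucket first, then by RICE score (highest first) within each bucket.
ordered = sorted(features, key=lambda f: (MOSCOW_RANK[f[1]], -f[2]))

# Won't-have items stay out of this release, whatever their RICE score.
release_plan = [name for name, bucket, _ in ordered if bucket != "won't"]
print(release_plan)
# ['Budget Tracking', 'Expense Visualization', 'Savings Goals', 'Dark Mode']
```

Note how a high RICE score alone doesn’t pull an item into the release: the bucket takes precedence, and RICE only breaks ties inside it.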

The PM’s hot take

When you do a RICE “analysis,” you’ll likely get a list of impactful features. These can be your game changers. If you can focus and deliver on these, you’ll get the results you seek.

“Impact” should rely the least on gut feeling. If you can’t find data to input into your scores, corroborate the scores with a few team members. If their scores are way off from yours, you’ll probably have to try a different technique like MoSCoW because the estimates are too vague. If their scores match yours, or are at least in the same ballpark, then you can be more confident that the feature can have a high impact.

The impact score can sometimes matter more than the overall RICE score, and there are many ways to work around effort and reach. Don’t drop a high-impact feature or product just because it got a low RICE score.
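One way to make “way off” vs. “in the same ballpark” concrete is to count how many steps apart team members’ impact scores sit on the scale. In this Python sketch, the one-step tolerance (`max_steps=1`) and the helper name `same_ballpark` are hypothetical choices, not part of RICE; the scale values are Intercom’s.

```python
# Impact scale values in ascending order (Intercom-style).
SCALE = [0.25, 0.5, 1, 2, 3]


def same_ballpark(estimates, max_steps=1):
    """True if all estimates sit within max_steps levels of each other on SCALE."""
    positions = [SCALE.index(e) for e in estimates]  # raises ValueError if off-scale
    return max(positions) - min(positions) <= max_steps


print(same_ballpark([2, 2, 3]))    # True: everyone says high or massive
print(same_ballpark([0.5, 2, 3]))  # False: the estimates are too far apart
```

Counting steps on the scale, rather than subtracting raw values, treats a jump from 0.25 to 0.5 as just as significant as a jump from 2 to 3.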

Wrap up

RICE can reveal why to choose a certain feature or product and help explain the choice to others.

Use the RICE framework as a guide that helps you gauge the most critical features that align with your business objectives, but also take it with a pinch of salt and try to validate the results before you dive right in.

Fibery is a product management platform that delivers an all-in-one solution. It integrates the functions of multiple tools you might use now. Because those tools are separate, they silo the info you need at your fingertips. Fibery doesn’t.

Fibery also contains many prioritization features like RICE, WSJF, and more, which can help drive your product management process.

Sign up here and try the free version to discover what Fibery can do for your product development. See if you love it.
